█████╗ ███╗ ██╗ ██████╗ ███████╗██╗ █████╗

██╔══██╗████╗ ██║██╔════╝ ██╔════╝██║ ██╔══██╗

███████║██╔██╗ ██║██║ ███╗█████╗ ██║ ███████║

██╔══██║██║╚██╗██║██║ ██║██╔══╝ ██║ ██╔══██║

██║ ██║██║ ╚████║╚██████╔╝███████╗███████╗██║ ██║

╚═╝ ╚═╝╚═╝ ╚═══╝ ╚═════╝ ╚══════╝╚══════╝╚═╝ ╚═╝

Your ambient-intelligence terminal companion that understands natural language and your development context

Project Vision: Angela CLI is not just another command-line tool; she's the world's first AGI (or will be), and she lives inside a place called the shell. She is designed to understand your natural language, execute commands intelligently, manage complex workflows, and even help you write and refactor code. She's your terminal's new best friend, learning your habits and proactively assisting you.

Angela CLI is built on several core principles:

- Ambient Intelligence: Angela should feel like a natural extension of your shell, not a separate tool you have to invoke. The boundary between standard commands and AI assistance should be minimal.
- Contextual Understanding: True assistance requires understanding the user's environment, project structure, and goals. Angela prioritizes deep context awareness.
- Multi-Level Abstraction: Users should be able to communicate at any level of abstraction, from specific commands to high-level goals, and get appropriate responses.
- Progressive Disclosure: Simple tasks should be simple, while complex capabilities should be available when needed but not overwhelming.
- Learning Over Time: The system should learn from user interactions to improve its suggestions and adaptations over time.

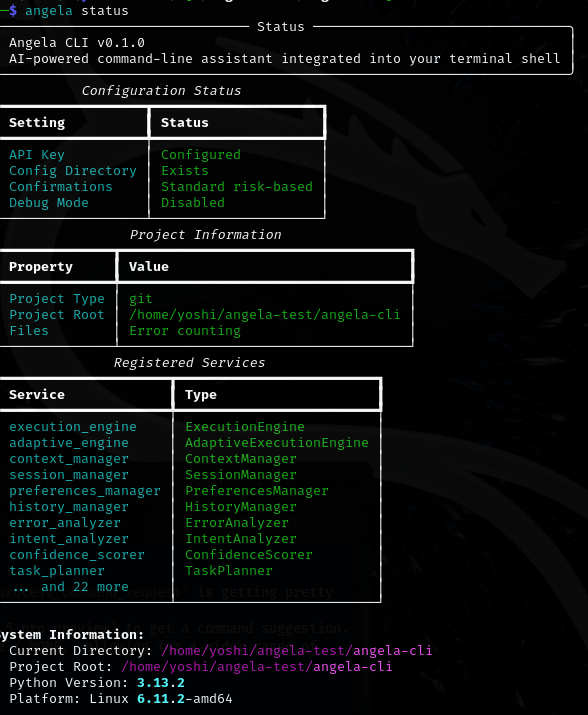

Current Status: Solid Foundation Laid, Core Execution Loop Getting Polished!

I've successfully built the skeleton and much of the nervous system of Angela. Here's what's looking good and what we've recently tuned up:

What's Done / Recently Improved (The "Angela Can Already Do This" List):

- Core Structure & Imports (The Blueprint is Solid):
  - The entire file structure is in place.
  - The `api/` layer was a smart but PAINFUL refactor, acting as a clean interface to all the `components/`. This keeps things tidy and avoids import spaghetti, which completely FRIES my brain to manage.
  - All `__init__.py` files are set up, making the Python packaging organized and clean.
  - `core/registry.py` is the central switchboard, making sure components can find each other without getting tangled up.

-

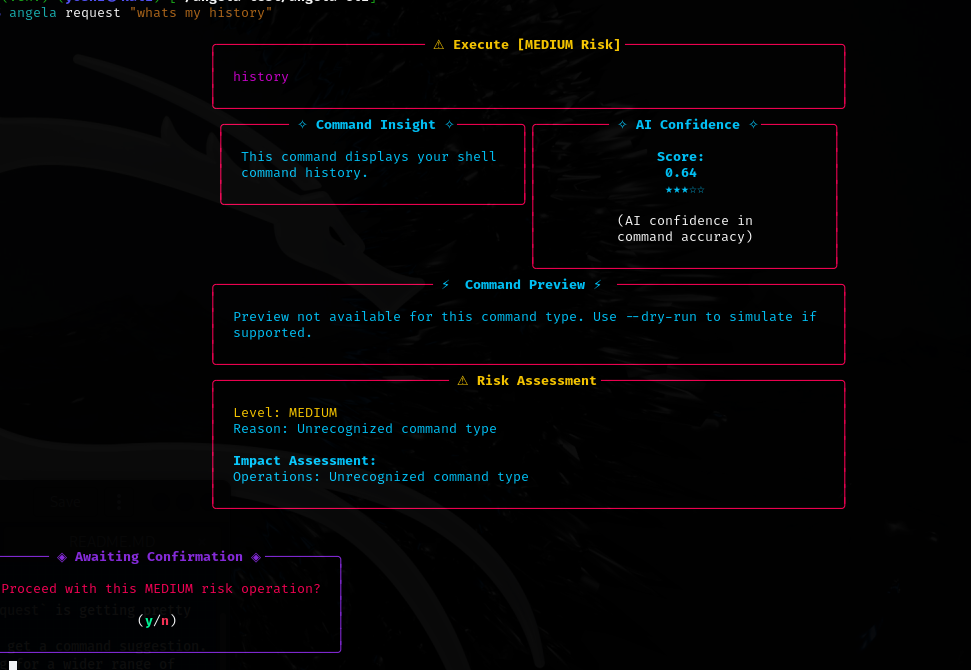

Basic Command Request Lifecycle (Angela's Basic Conversation):

- Input:

angela request "do something simple"(viacomponents/cli/main.py). - Orchestration (

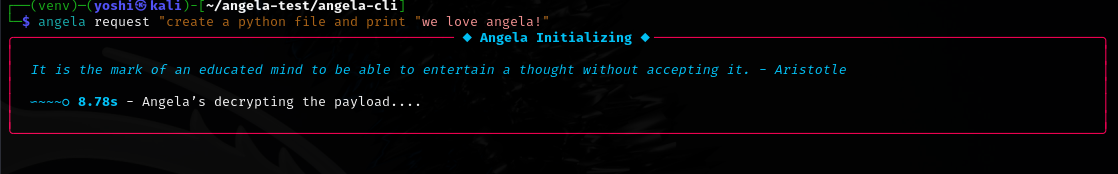

orchestrator.py): This is Angela's brain. It takes your request and starts figuring out what to do. The_process_command_requestis getting pretty good at handling straightforward commands. - AI Suggestion (

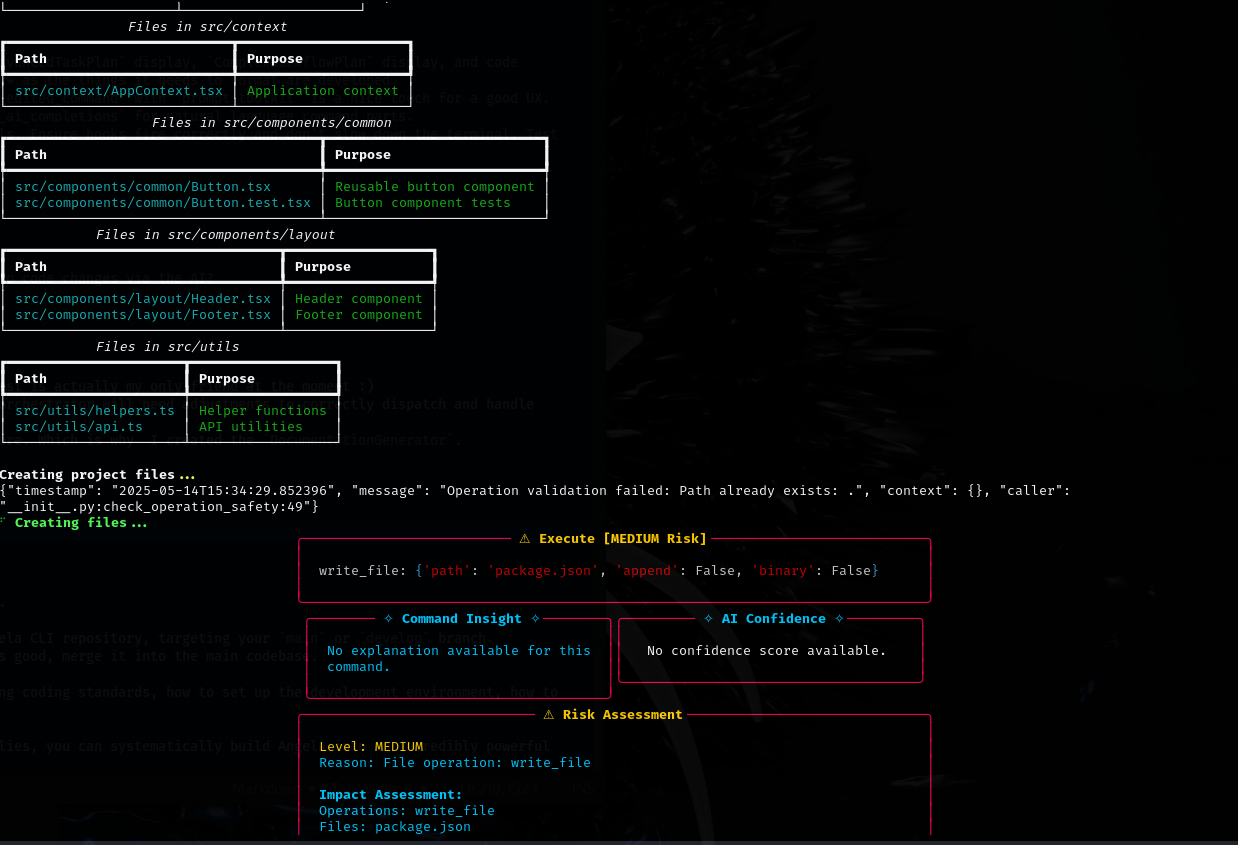

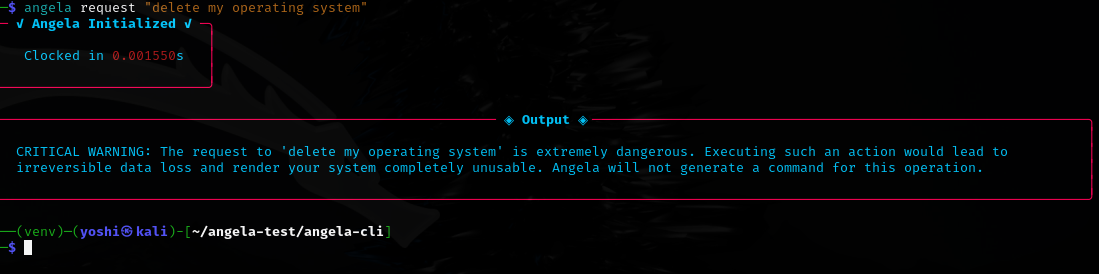

components/ai/client.py,prompts.py,parser.py): Angela talks to her AI friend (Gemini, specifically 2.5 pro preview) to get a command suggestion. The client and basic prompts are working, and the parser can understand what the AI says. I've defualt the safety settings to permissive / NONE, allowing for a wider range of command suggestions. - Safety 95% done (

components/safety/):classifier.py: Can categorize commands by risk (SAFE, LOW, MEDIUM, HIGH, CRITICAL).validator.py: Checks for obviously dangerous patterns.preview.py: Can generate previews for many common commands, helping you see what will happen.confirmation.py: Handles the basic "Are you sure?" for risky things.adaptive_confirmation.py(Recently Tuned!): This is getting smarter and is bascially completed! It now better handles when to ask for confirmation versus when to auto-execute based on command trust, risk, and history. The double "Auto-Executed" panel issue for trusted commands should be much improved or resolved. The UI for it is looking fantastic as well.

- Execution (

components/execution/):adaptive_engine.py(Recently Tuned!): This engine now has a clearer flow for when to display auto-execution notices versus full confirmation prompts. It's also getting better at ensuring actual command output is shown after execution.engine.py: The underlying workhorse that actually runs the commands.

- UI & Feedback (

components/shell/formatter.py):- The basic theme (colors, boxes) is established, theme, UI, Rich Styling.

- Angela can display suggested commands, previews, risk warnings, and confirmation prompts with a consistent look.

- The "Angela Initialized" and success/failure messages after command execution are now more reliably displayed.

- The loading/execution timer is in place and is working amazing. It randomly outputs 1/50 philosophy quotes....because philosophy is cool. Im kinda going for that 'Her' Movie vibe.

- Input:

-

Context is Key (

components/context/):preferences.py&history.py: Angela remembers your settings and what you've done before, which feeds into adaptive confirmation.manager.py: Knows where you are (cwd) and if you're in a project.file_resolver.py(Majorly Enhanced!): Angela's "File Detective" is now quite skilled at figuring out which file you mean, even if you're a bit vague.

-

Utilities (

components/utils/):logging.py&enhanced_logging.py: Angela keeps a good diary of what she's doing, especially useful for debugging.

-

Interactive Command Handling (

utils/command_utils.py):- Angela now politely tells you to run interactive commands (like

vimortop) yourself, as she can't really "drive" those for you.

- Angela now politely tells you to run interactive commands (like

-

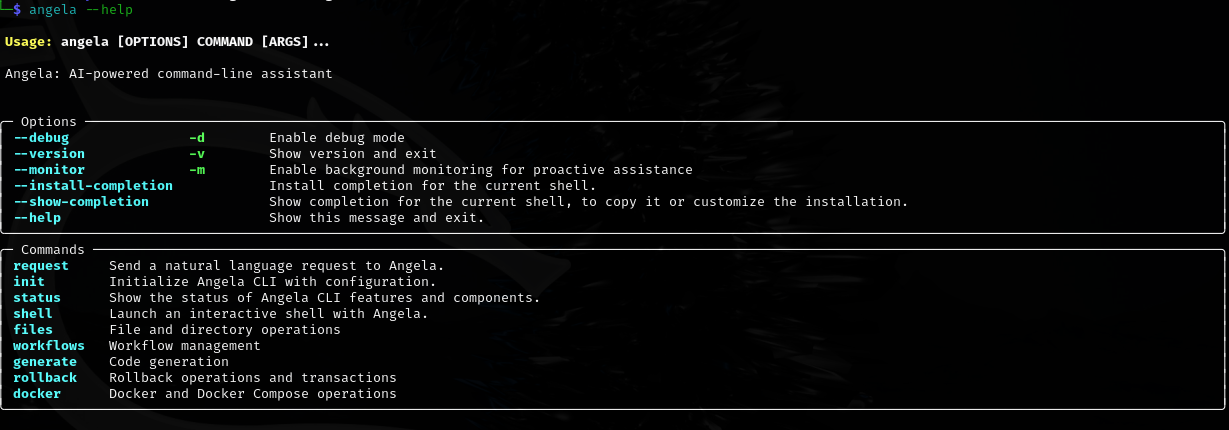

Basic CLI Functionality (

components/cli/main.py):angela --help,angela --version,angela initare up and running.

-

Development Setup:

pytest.ini,Makefileare ready, making development smoother.
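
To make the safety flow described above more concrete, here is a minimal sketch of how the five risk levels could drive the confirm-or-auto-execute decision. It is not the code in `components/safety/`; the pattern table, `classify`, and `should_confirm` are hypothetical names and rules chosen only for illustration.

```python
import re
from enum import IntEnum


class RiskLevel(IntEnum):
    """The five risk levels named in the Safety section."""
    SAFE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical pattern table, checked from most to least dangerous.
RISK_PATTERNS = [
    (RiskLevel.CRITICAL, re.compile(r"\brm\s+-[a-zA-Z]*r[a-zA-Z]*\s+/\s*$|\bmkfs\b")),
    (RiskLevel.HIGH, re.compile(r"\brm\s+-[a-zA-Z]*r|\bsudo\b")),
    (RiskLevel.MEDIUM, re.compile(r"\b(rm|mv|chmod|chown)\b")),
    (RiskLevel.LOW, re.compile(r"\b(mkdir|touch|cp)\b")),
]


def classify(command: str) -> RiskLevel:
    """Return the first (highest) risk level whose pattern matches."""
    for level, pattern in RISK_PATTERNS:
        if pattern.search(command):
            return level
    return RiskLevel.SAFE


def should_confirm(level: RiskLevel, trusted: bool) -> bool:
    """Assumed policy: HIGH and above always ask; MEDIUM asks unless trusted."""
    if level >= RiskLevel.HIGH:
        return True
    return level == RiskLevel.MEDIUM and not trusted


if __name__ == "__main__":
    for cmd in ["ls -la", "mkdir build", "rm notes.txt", "rm -rf build", "rm -rf /"]:
        level = classify(cmd)
        print(f"{cmd!r}: {level.name}, confirm={should_confirm(level, trusted=False)}")
```

Ordering the table from most to least dangerous means the first match is always the highest applicable level, which keeps the classifier a single loop.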

The "Angela is Learning & Growing" Roadmap (What's Next):

Think of this as Angela going to different schools to learn new skills. Each "family" or "department" in her brain (components/) needs attention.

Overall Theme for Next Steps: From Baseline to Brilliance. We have the structures; now we need to flesh them out, make them robust, and ensure they all talk to each other seamlessly. I'll focus on testing, refining, and debugging existing components while incrementally building out new functionalities.

Phase 1: Strengthening the Core & Understanding

- The "Analyzer Family" (`components/ai/analyzer*.py` files):
  - Goal: Make Angela a true "code whisperer."
  - Files: `analyzer.py` (error analysis), `content_analyzer.py` (understanding file content), `content_analyzer_extensions.py` (handling more file types), `intent_analyzer.py` (figuring out what you want), `semantic_analyzer.py` (deep code understanding).
  - What to do:
    - Error Analyzer (`analyzer.py`): Enhance its pattern matching for common errors. Test its suggestions. Does it give useful advice when commands fail?
    - Intent Analyzer (`intent_analyzer.py`): This is crucial. Refine its ability to correctly classify your requests. Test with ambiguous phrasings. How well does it pick the right `RequestType` in `orchestrator.py`?
    - Content Analyzer (`content_analyzer.py` & extensions):
      - Flesh out the `_analyze_python`, `_analyze_typescript`, and `_analyze_json` methods.
      - Implement the other `_analyze_*` methods for different languages and data formats. This will be key for context.
    - Semantic Analyzer (`semantic_analyzer.py`): This is a big one for advanced features.
      - Start by thoroughly testing `analyze_file` for Python and JavaScript.
      - Ensure `_analyze_python_file` and `_analyze_javascript_file` correctly extract functions, classes, and imports.
      - `_analyze_with_llm` is a good fallback but will be slower and less precise; prioritize native parsers.
  - Fun Explanation: The Analyzers are Angela's senses and deductive reasoning. `IntentAnalyzer` is her ears, figuring out the gist. `ContentAnalyzer` is her eyes, reading the files. `SemanticAnalyzer` is her deep-thinking brain, understanding the meaning of the code. `ErrorAnalyzer` is her troubleshooter.
- The "Prompts Department" (`components/ai/prompts.py`, `enhanced_prompts.py`):
  - Goal: Craft perfect instructions for Angela's AI backend.
  - What to do:
    - As I enhance the analyzers, refine the prompts to use that new contextual information. For example, if `SemanticAnalyzer` can identify a class, the prompt for "refactor this class" should include that semantic info.
    - Test how different phrasings in my prompts affect the AI's output quality for suggestions and plans.
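
As an illustration of the "prioritize native parsers" point for the Semantic Analyzer, the sketch below uses Python's standard `ast` module to pull functions, classes, and imports out of a source string. It is not the actual `_analyze_python_file`; the function name `summarize_python_source` and the shape of the returned dictionary are assumptions.

```python
import ast
from typing import Dict, List


def summarize_python_source(source: str) -> Dict[str, List[str]]:
    """Collect function, class, and imported module names from Python source.

    Hypothetical helper: a native-parser pass like this is fast and exact,
    so an LLM fallback is only needed for languages without a parser.
    """
    tree = ast.parse(source)
    functions, classes, imports = [], [], []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions.append(node.name)
        elif isinstance(node, ast.ClassDef):
            classes.append(node.name)
        elif isinstance(node, ast.Import):
            imports.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            # node.module is None for "from . import x"; record it as ".".
            imports.append(node.module or ".")
    # Sort so the result does not depend on traversal order.
    return {
        "functions": sorted(functions),
        "classes": sorted(classes),
        "imports": sorted(imports),
    }


SAMPLE = '''
import os
from pathlib import Path

class Greeter:
    def greet(self, name):
        return f"hi {name}"

async def main():
    pass
'''

if __name__ == "__main__":
    print(summarize_python_source(SAMPLE))
```

A summary like this is also the kind of semantic info a "refactor this class" prompt could include.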

Phase 2: Mastering Complex Tasks & Workflows

- The "Planner Posse" (`components/intent/planner.py`, `enhanced_task_planner.py`, `complex_workflow_planner.py`, `semantic_task_planner.py`):
  - Goal: Enable Angela to handle multi-step operations and user-defined workflows flawlessly.
  - Files & What I'll do:
    - `planner.py` (Basic): Ensure `_create_basic_plan` and `_execute_basic_plan` are robust for simple sequences identified by the `Orchestrator`. Test how it handles dependencies.
    - `enhanced_task_planner.py`: This is the workhorse for more complex, single-tool or single-domain plans.
      - Thoroughly test `_execute_advanced_step` for each `PlanStepType` (COMMAND, CODE, FILE, DECISION, API, LOOP).
      - Focus on data flow (`_resolve_step_variables`, `_get_variable_value`, `_set_variable`). Does data pass correctly between steps?
      - Test `_execute_code_step` and its sandboxing carefully.
    - `semantic_task_planner.py`:
      - Integrate its `_analyze_intent` more deeply if it offers advantages over the basic `IntentAnalyzer`.
      - Test the `_create_clarification` and `_get_user_clarification` flow. This is key for ambiguous requests.
    - `complex_workflow_planner.py` (and `components/toolchain/cross_tool_workflow_engine.py`): This is for orchestrating multiple different tools (e.g., git -> docker -> aws).
      - Test `create_workflow` and `execute_workflow`.
      - Focus on `_execute_step` within this planner, ensuring it correctly calls the `EnhancedUniversalCLI` or specific tool integrations.
      - Data flow (`_apply_data_flow`) between different tools is critical here.
  - Fun Explanation: The Planners are Angela's strategists. `TaskPlanner` handles simple to-do lists. `EnhancedTaskPlanner` is like a project manager for more involved tasks within one "department" (e.g., a series of Git commands). `SemanticTaskPlanner` is the diplomat, asking for clarification. `ComplexWorkflowPlanner` is the grand conductor, making different orchestras (tools) play together in harmony.
- The "Workflow Brigade" (`components/cli/workflows.py`, `components/workflows/manager.py`, `sharing.py`):
  - Goal: Allow users to create, save, run, and share their own automated sequences.
  - What I'll do:
    - Test `angela workflows create` (both interactive and from file).
    - Test `angela workflows run` with and without variables.
    - Test `angela workflows export` and `import`.
    - Ensure `WorkflowManager._substitute_variables` works correctly.
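
Variable substitution is easy to get subtly wrong, so here is a small sketch of what a check for `WorkflowManager._substitute_variables` might exercise. The `${NAME}` placeholder syntax, the function name, and the decision to shell-quote values are all assumptions for illustration; the real workflow format may differ.

```python
import re
import shlex
from typing import Mapping

# Assumed placeholder syntax: ${NAME}
_PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def substitute_variables(command: str, variables: Mapping[str, str]) -> str:
    """Replace ${NAME} placeholders with shell-quoted values.

    Raises ValueError listing every undefined variable, so a workflow
    fails before any step runs instead of halfway through.
    """
    missing = sorted({name for name in _PLACEHOLDER.findall(command)
                      if name not in variables})
    if missing:
        raise ValueError("undefined workflow variables: " + ", ".join(missing))
    return _PLACEHOLDER.sub(
        lambda match: shlex.quote(str(variables[match.group(1)])), command
    )


if __name__ == "__main__":
    step = "git tag ${TAG} && git push origin ${TAG}"
    print(substitute_variables(step, {"TAG": "v1.2"}))
    print(substitute_variables("echo ${MSG}", {"MSG": "hello world"}))
```

Quoting with `shlex.quote` keeps a value containing spaces or shell metacharacters from being interpreted as extra arguments or commands.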

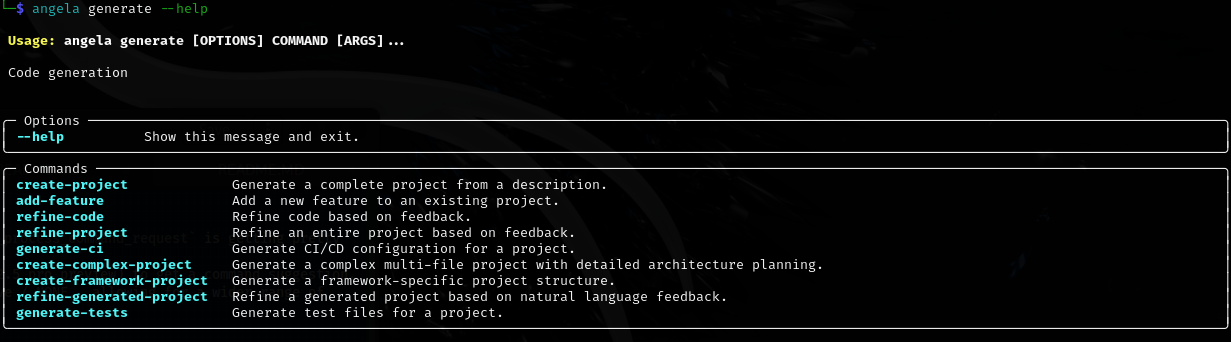

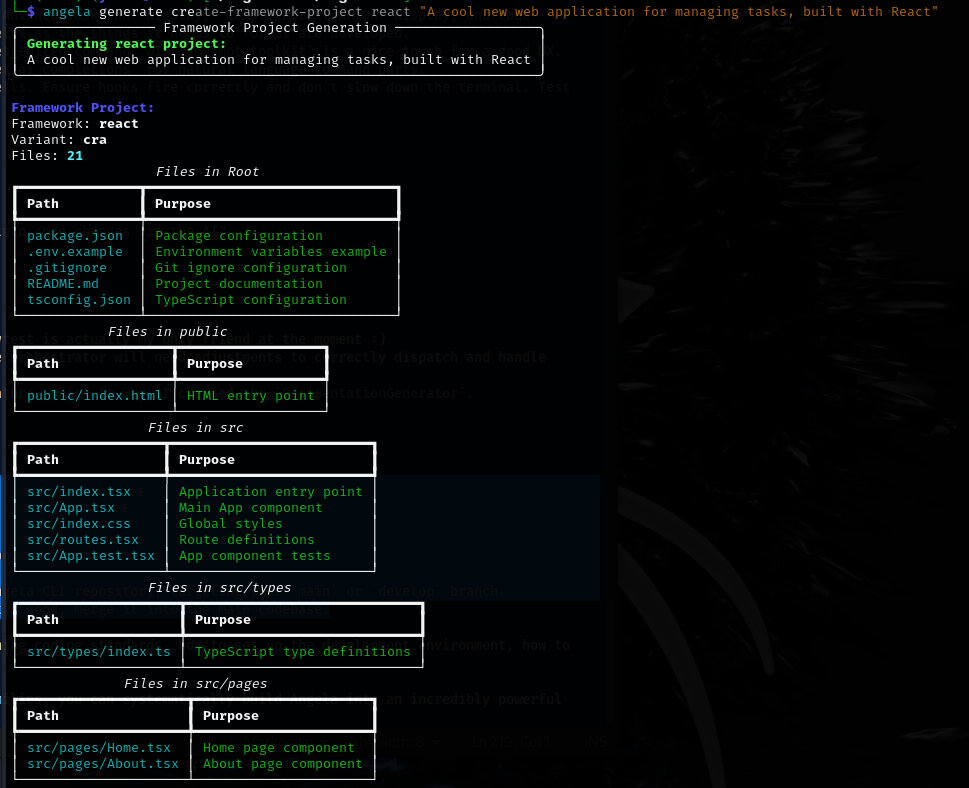

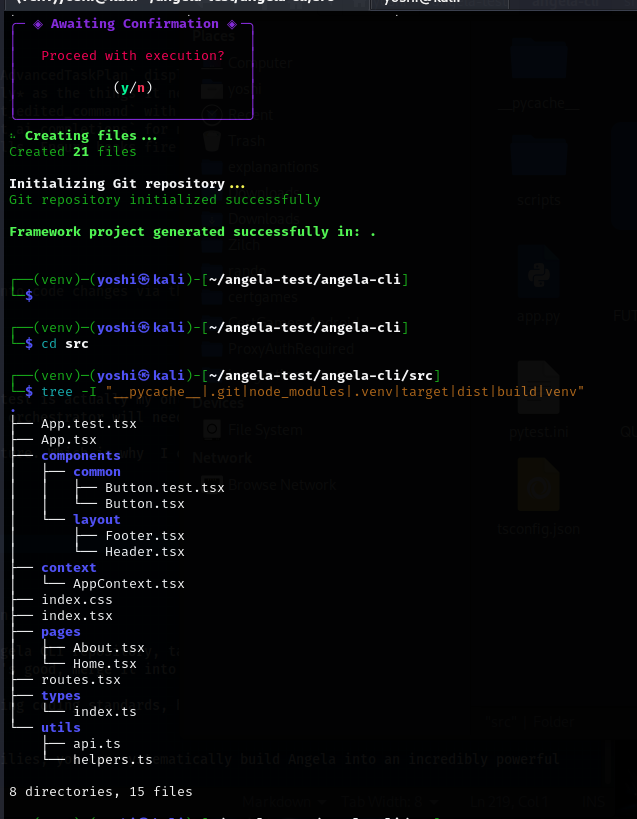

Phase 3: Supercharging Code Generation

- The "Generation Guild" (ALL files in `components/generation/`):
  - Goal: This is Angela's superpower: creating code, from single files to entire projects. This will be a major focus.
  - Files & What I'll do (Iterative Process):
    - `models.py`: Already good; defines `CodeFile` and `CodeProject`.
    - `validators.py`: Add more validators for other languages (Ruby, Rust, PHP, C++, C#, Swift, Kotlin). Make existing ones more robust.
    - `architecture.py` (`ArchitecturalAnalyzer`):
      - Implement more `ArchitecturalPattern` and `AntiPattern` detectors (e.g., Layered, Microservices, Spaghetti Code, Lava Flow).
      - Test `analyze_project_architecture` thoroughly.
    - `planner.py` (`ProjectPlanner`; this one is for code generation planning):
      - This is critical. Test `create_detailed_project_architecture` and `create_project_plan_from_architecture`. The quality of the AI's output here dictates the quality of the generated project.
      - Refine prompts in `_build_detailed_architecture_prompt`.
    - `context_manager.py` (`GenerationContextManager`):
      - Test how well it tracks entities and dependencies during a generation process.
      - Ensure `enhance_prompt_with_context` provides useful, non-redundant context to the AI when it's generating subsequent files.
    - `frameworks.py` (`FrameworkGenerator`):
      - Flesh out `_generate_react`, `_generate_django`, etc.
      - Implement the `_generate_enhanced_*` methods for more production-ready structures.
      - `_generate_content` (which calls `_generate_file_content`) is key. It needs robust prompting.
    - `engine.py` (`CodeGenerationEngine`):
      - This is the main engine. `generate_project` and `generate_complex_project` are the top-level entry points.
      - `_create_project_plan` and `_generate_file_contents` (and their complex counterparts) are the core loops. Debugging these will involve looking at the AI prompts and responses for each file.
      - Test `add_feature_to_project`; this is complex as it involves understanding existing code.
    - `documentation.py` (`DocumentationGenerator`):
      - Implement and test README, API doc, and guide generation for various project types.
    - `refiner.py` (`InteractiveRefiner`):
      - Test `process_refinement_feedback`. How well does it understand feedback and apply it to specific files?
  - Explanation: The Generation Guild is Angela's construction crew. `ProjectPlanner` and `ArchitecturalAnalyzer` are the architects. `FrameworkGenerator` provides the blueprints for common building types. `CodeGenerationEngine` is the master builder, and `GenerationContextManager` is the site foreman making sure everyone has the right info. `InteractiveRefiner` is the quality inspector and renovation expert.
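
One way to picture the `validators.py` item above is a registry that maps file extensions to syntax checkers, so adding a language means adding one entry. This is only a sketch under that assumption; `VALIDATORS`, `validate_file`, and the return convention (an error string, or `None` when the content is fine or no validator exists) are hypothetical.

```python
import ast
import json
from pathlib import PurePath
from typing import Callable, Dict, Optional


def validate_python(content: str) -> Optional[str]:
    """Return an error message if the content is not valid Python syntax."""
    try:
        ast.parse(content)
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None


def validate_json(content: str) -> Optional[str]:
    """Return an error message if the content is not valid JSON."""
    try:
        json.loads(content)
    except json.JSONDecodeError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None


# Hypothetical registry: new languages plug in here.
VALIDATORS: Dict[str, Callable[[str], Optional[str]]] = {
    ".py": validate_python,
    ".json": validate_json,
}


def validate_file(path: str, content: str) -> Optional[str]:
    """Validate generated content by extension; unknown types pass through."""
    validator = VALIDATORS.get(PurePath(path).suffix.lower())
    return validator(content) if validator else None


if __name__ == "__main__":
    print(validate_file("app.py", "def broken(:\n    pass\n"))
    print(validate_file("config.json", '{"name": "angela"}'))
```

Validating each generated file before writing it gives the engine a cheap signal for when to re-prompt the AI.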

Phase 4: Enhancing System Awareness & Proactivity

- The "Monitoring Squad" (`components/monitoring/`):
  - Goal: Make Angela a watchful guardian.
  - Files & What I'll do:
    - `background.py` (`BackgroundMonitor`):
      - Test `_monitor_git_status`, `_monitor_file_changes`, and `_monitor_system_resources`. Are they triggering correctly?
      - Refine the conditions under which suggestions are made (e.g., `_can_show_suggestion`).
    - `network_monitor.py` (`NetworkMonitor`):
      - Test service detection and status checking.
      - Improve dependency update checks for more project types.
    - `notification_handler.py`: Test integration with shell hooks. Are `pre_exec`, `post_exec`, and `dir_change` notifications handled correctly and updating context?
    - `proactive_assistant.py`:
      - This ties it all together. Test `_handle_monitoring_event` and the individual `_handle_*_insight` methods.
      - Implement more `_pattern_detectors` for common issues.
      - Refine the actual suggestions made. Are they helpful and actionable?
  - Fun Explanation: The Monitoring Squad are Angela's spies and sensors, always keeping an eye on your system and how you work, ready to offer a helpful tip through the `ProactiveAssistant`.
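
A proactive assistant gets annoying fast if it repeats itself, which is presumably what a check like `_can_show_suggestion` guards against. The sketch below shows one possible rule, a per-kind cooldown; the class name, the 300-second default, and the injectable clock are assumptions, not the actual implementation.

```python
import time
from typing import Callable, Dict


class SuggestionThrottle:
    """Allow each kind of suggestion at most once per cooldown window."""

    def __init__(self, cooldown_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._cooldown = cooldown_seconds
        self._clock = clock  # injectable so tests can control time
        self._last_shown: Dict[str, float] = {}

    def can_show(self, kind: str) -> bool:
        """Return True and record the time if this kind is off cooldown."""
        now = self._clock()
        last = self._last_shown.get(kind)
        if last is not None and now - last < self._cooldown:
            return False
        self._last_shown[kind] = now
        return True


if __name__ == "__main__":
    throttle = SuggestionThrottle(cooldown_seconds=60)
    print(throttle.can_show("git_uncommitted"))  # first time: allowed
    print(throttle.can_show("git_uncommitted"))  # immediately again: suppressed
    print(throttle.can_show("disk_space"))       # different kind: allowed
```

Passing the clock in as a parameter makes the triggering conditions testable without sleeping.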

Phase 5: Fine-tuning Execution and Context

- The "Execution Excellence" Team (`components/execution/`):
  - Goal: Flawless command execution and robust error handling.
  - Files & What I'll do:
    - `error_recovery.py`: This is vital and complex.
      - Test `handle_error`. Does it correctly analyze errors and suggest/apply sensible recovery strategies?
      - The learning aspect (`_learn_from_recovery_result`) needs careful testing.
    - `filesystem.py`: This seems to be working well, which is great! However, I will do a quick review for edge cases.
    - `hooks.py`: What specific actions are these hooks meant to trigger beyond basic file activity tracking? I will ensure they are registered and firing correctly.
    - `rollback.py` & `rollback_commands.py`: Critical for safety.
      - Test recording operations for each type (filesystem, content, command, plan).
      - Test `rollback_operation` and `rollback_transaction`. Do they correctly revert changes?
      - Test the CLI commands in `rollback_commands.py`.
- The "Contextual Masters" (`components/context/`):
  - Goal: Ensure Angela always has the richest, most relevant context.
  - Files & What I'll do:
    - `file_activity.py` & `enhanced_file_activity.py`: Test that activities are being tracked correctly, especially the enhanced entity tracking.
    - `file_detector.py`: Add more `LANGUAGE_EXTENSIONS` and `FILENAME_MAPPING` entries as needed.
    - `project_state_analyzer.py`: This is a big one.
      - Test `_analyze_git_state`, `_analyze_test_status`, `_analyze_build_status`, etc., for various project types. Are they accurate?
      - `_find_todo_items` is a nice touch (for me lol).
    - `enhancer.py` (`ContextEnhancer`): This glues many context pieces together. I need to verify that `enrich_context` correctly calls and integrates info from `ProjectInference`, `FileActivityTracker`, etc.
    - `project_inference.py` (`ProjectInference`): This is the cousin to the AI analyzers. Its job is to figure out what kind of project it's in.
      - Test `infer_project_info` with diverse project structures.
      - Refine `PROJECT_SIGNATURES` and `FRAMEWORK_SIGNATURES`.
      - Ensure dependency detection (`_detect_dependencies`) is accurate for more languages.
    - `semantic_context_manager.py`: This is the "semantic family" head for context.
      - Test `refresh_context` and how it integrates `ProjectStateAnalyzer` and `SemanticAnalyzer`.
      - Test `get_entity_info`, `find_related_code`, `get_code_summary`, and `get_project_summary`. These are powerful features that need robust backing from the semantic analyzer. As you can tell, I'm into semantics.
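
For the rollback tests mentioned under the Execution Excellence team, it may help to see the basic idea in miniature: record what a file looked like before changing it, then replay those records in reverse. This sketch covers only the "content" operation type, and `ContentOperation` and `RollbackLog` are hypothetical names rather than the classes in `rollback.py`.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import List, Optional


@dataclass
class ContentOperation:
    """Snapshot taken before a file write."""
    path: Path
    previous: Optional[str]  # None means the file did not exist before


class RollbackLog:
    """Record file writes so they can be reverted in reverse order."""

    def __init__(self) -> None:
        self._operations: List[ContentOperation] = []

    def write_file(self, path: Path, new_content: str) -> None:
        previous = path.read_text() if path.exists() else None
        self._operations.append(ContentOperation(path, previous))
        path.write_text(new_content)

    def rollback_last(self) -> bool:
        """Undo the most recent write; return False if nothing is recorded."""
        if not self._operations:
            return False
        op = self._operations.pop()
        if op.previous is None:
            op.path.unlink(missing_ok=True)  # file was created by the write
        else:
            op.path.write_text(op.previous)
        return True

    def rollback_all(self) -> int:
        """Undo every recorded write, newest first; return how many."""
        count = 0
        while self.rollback_last():
            count += 1
        return count
```

A test can write to a file in a temporary directory twice, call `rollback_all()`, and check that the original content (or absence of the file) is restored.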

Phase 6: Polishing the User Experience

- The "Shell Wizards" (`components/shell/`):
  - Goal: Make Angela feel like a natural, seamless part of the terminal.
  - Files & What I'll do:
    - `enhanced_formatter.py` (to be developed alongside planners/generators): As I build out the `AdvancedTaskPlan` display, the `ComplexWorkflowPlan` display, and code generation result displays, I'll implement the corresponding methods here. This should be done iteratively as the things it needs to format are developed.
    - `inline_feedback.py`: Flesh this out. Test `show_message` and `suggest_command`. `_get_edited_command` with `prompt_toolkit` is a nice touch for a good UX.
    - `completion.py`: Test `get_completions` for various commands and contexts. Refine `_get_ai_completions` for natural language command parts.
    - `.bash` & `.zsh` Shell Integration Scripts: Test these thoroughly in their respective shells. Ensure hooks fire correctly and don't slow down the terminal. Test completions.
- The "Review Crew" (`components/review/`):
  - Goal: Enable effective review and application of changes.
  - Files & What I'll do:
    - `diff_manager.py`: Test `generate_diff` and `apply_diff`.
    - `feedback.py` (`FeedbackManager`): Crucial for iterative code generation and refinement.
      - Test `process_feedback`. How well does it understand textual feedback and translate it into code changes via the AI?
      - Test `refine_project` for broader, project-wide feedback.
      - Test `apply_refinements` to ensure changes are written correctly.
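
As a reference point when testing `generate_diff`, the sketch below produces a unified diff with Python's standard `difflib`. Whether `diff_manager.py` uses `difflib` is an assumption, and the signature shown here is hypothetical. Applying a diff is not covered, since the standard library has no patch-apply function.

```python
import difflib


def generate_diff(original: str, modified: str, path: str = "file") -> str:
    """Return a unified diff between two versions of a file's content.

    Returns an empty string when the two versions are identical.
    """
    diff_lines = difflib.unified_diff(
        original.splitlines(keepends=True),
        modified.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff_lines)


if __name__ == "__main__":
    before = "def greet():\n    print('hi')\n"
    after = "def greet():\n    print('hello')\n"
    print(generate_diff(before, after, "greet.py"))
```

Useful test cases include identical inputs (empty diff), a single changed line, and content without a trailing newline.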

Contributing is easy:

- Fork It: Click "Fork" on the main Angela CLI project page to make your own copy.
- Clone It: Download your forked copy to your computer: `git clone https://[YourGitPlatform]/[YourUsername]/angela-cli.git`
- Branch Out: Create a new branch for your changes: `git checkout -b my-cool-angela-feature`
- Code Away: Make your awesome changes, add tests, and write docs!
- Push It: Save your work back to your forked copy: `git push origin my-cool-angela-feature`
- Pull Request (PR) Time: Go to the original Angela CLI project and open a "Pull Request" from your new branch. Explain what you did.
- Chat & Merge: Angela and I will look it over, maybe chat a bit, and then merge your great work in!

Quick Tips:

- Small, focused PRs are best.

- If it's a big idea, open an "issue" to chat first.

- Make sure tests pass!

Thanks for helping make Angela smarter! ✨