
Smooth Sampling / Quadratic Sampling support #6445


|

I would also like to point out that Dynamic Temperature is unrelated to this and is not required in order to use the quadratic transformation; the two are only combined in one "entropy" function to avoid creating an additional function. |

|

Backported the "curve", which is the 2nd hyperparameter (smoothing_curve). There might be a more intuitive or natural way to implement this, but people seemed to appreciate this addition to the original quad sampling, so I'm adding it as is. |
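For readers who want to see what a curve parameter could look like, here is a minimal Python sketch. It assumes the curve adds a cubic term to the quadratic penalty; the coefficients `k` and `s` and the function name `quadratic_smooth_curve` are assumptions made for illustration and should be checked against the PR diff before relying on them. In this form a `smoothing_curve` of 1.0 reduces to the plain quadratic transformation.

```python
def quadratic_smooth_curve(logits, smoothing_factor, smoothing_curve=1.0):
    """Quadratic smoothing with an assumed cubic 'curve' term (illustrative only)."""
    if smoothing_factor == 0.0:
        return list(logits)  # 0 is treated as "off"

    top = max(logits)
    # Assumed blend between the quadratic and cubic terms.
    k = (3.0 - smoothing_curve) / 2.0
    s = (smoothing_curve - 1.0) / 2.0

    out = []
    for x in logits:
        if x == float("-inf"):
            out.append(x)  # keep already-masked tokens masked
            continue
        d = x - top  # distance from the topmost logit, always <= 0
        out.append(top - k * smoothing_factor * d ** 2 + s * smoothing_factor * d ** 3)
    return out


logits = [5.0, 4.9, 2.0]
plain = quadratic_smooth_curve(logits, 0.2, 1.0)
curved = quadratic_smooth_curve(logits, 0.2, 1.5)
print(plain)
print(curved)
```

Under these assumptions, a curve above 1.0 penalises tokens close to the top less and tokens far from the top more than the plain quadratic does.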

"temp": 1.5,

"dynatemp_range": 1.4,

"smoothing_factor": 0.2,

"smoothing_curve": 1.5,

"samplers_sequence": "kt",

And it just works, no major problems with repetition (even though penalties are switched off). One question: dynatemp (and smoothing now) are not currently used with Mirostat. Would it be beneficial to use them together, theoretically speaking? I tried it once, but Mirostat was difficult to work with originally, and is difficult to compare now. |

|

I know it's late to change it, but I really don't like how there's a huge discontinuity in behaviour between 0.0 and small positive values. None of the other samplers are like this, and I expect it will cause confusion to a lot of users that are mentally used to "it's close to the off value, so it means small effects right?". |

|

It would be nice to have a separate |

Unfortunately, I can't think of a mathematically identical way to scale the logits that wouldn't require bounding to some arbitrary value or taking control away from the user.

I imagine @ggerganov would probably prefer if I did this before it can be merged, since the entropy DynaTemp is distinct from this technique. Though, it would probably require a new case switch / new position in the sampler order if we want temperature + this to be "stackable". How does that sound? |

|

In my opinion, we already have more than enough samplers implemented. The interface allows user code to implement custom sampling techniques if more is needed. Still, if we want to extend further, we can do it. But we should write some unit tests also. Maybe add some tests for this functionality and merge it for now? |

|

Have you tried using It's intrinsically linked to the softmax (aka multinomial logistic regression) function and the only scaling function which is invariant to translation in the same way as the logits are (meaning you don't need to subtract from the maximum logit as the ratio of scale factors is constant if the gap between logits remains the same). If you reparameterise the temperature as

If you do 'center' them then interestingly Also IIRC, this idea is equivalent to multiplying the final probability values by a fraction of their negative logarithms (ie: it can be applied post-softmax), and this function has a special name (which I can't seem to remember nor find atm) which is used in actuarial models (IIRC, something to do with 'survival functions' where you want to penalise "weaker" values more and in a nonlinear way). So the general transformation is:

which again shows the similarity to a polynomial transformation. Another alternate parameterisation would be https://en.wikipedia.org/wiki/Generalized_normal_distribution |

|

How's this PR doing? Is there any intent to start merging it now? |

|

Hi, now that DRY has been merged, is it possible to continue working on this PR? |

Smooth Sampling / Quadratic Sampling

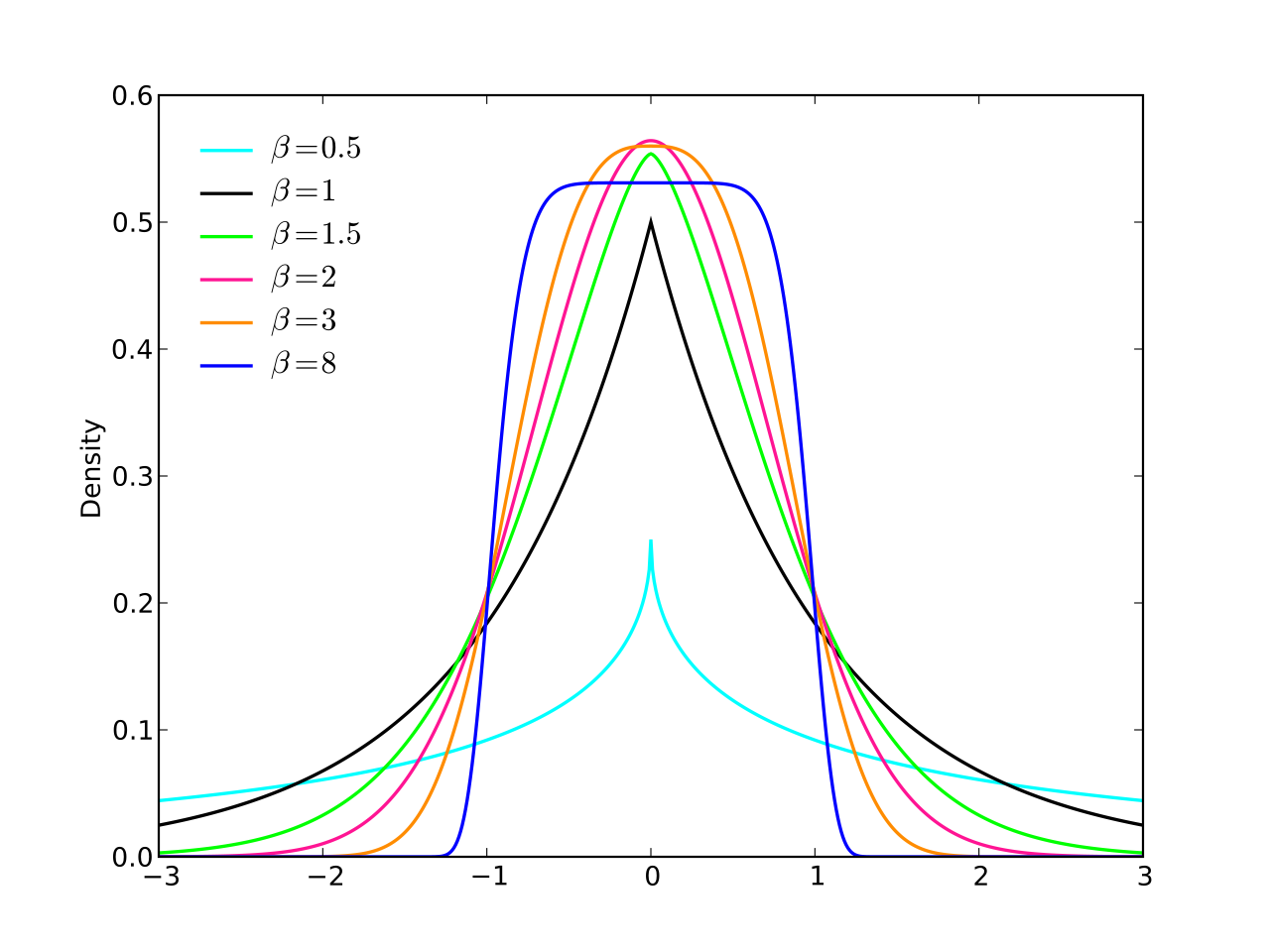

This sampling method differs from the truncation samplers (Top K, Top P, Min P) or traditional Temperature sampling, since we are changing the raw scores in a non-linear fashion.

With this approach, we tweak the original logit scores using a quadratic transformation, based on each score's distance from the topmost logit.

You can view this as an alternative to Temperature that scales differently (though they are not mutually exclusive); the idea is that we can make the good choices more evenly probable while still punishing extreme outlier tokens.
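As a rough illustration of the idea described above, the following Python sketch subtracts a penalty proportional to the squared distance from the topmost logit. The function names and the exact form `top - factor * (x - top) ** 2` are assumptions made for illustration, not code taken from this PR.

```python
import math


def quadratic_smooth(logits, smoothing_factor):
    """Reshape logits with a quadratic penalty on distance from the top logit."""
    if smoothing_factor == 0.0:
        return list(logits)  # 0 is treated as "off"
    top = max(logits)
    return [top - smoothing_factor * (x - top) ** 2 for x in logits]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [5.0, 4.9, 2.0, -1.0]
smoothed = quadratic_smooth(logits, 1.0)
print(smoothed)           # the top logit is unchanged, far-away logits drop sharply
print(softmax(smoothed))  # near-tied top tokens stay near-tied
```

Because the penalty grows with the square of the gap, tokens close to the top are barely touched while distant tokens are pushed down much harder than a linear rescaling would push them.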

Graph demonstration with voiceover (excuse how I sound here): https://files.catbox.moe/x804ia.mp4

This has been implemented in koboldcpp and text-generation-webui for a while now, and it received some praise there.

Considering that, I wanted to backport it to llama.cpp for use with the server.

How is this meaningfully different from Temperature?

The interesting element is that even the higher, more deterministic values will avoid biasing towards the topmost token if there's a group of similar probability tokens at the top, which meaningfully differs from lower Temperature having a linear skew.

So instead of the top two tokens that were originally 51/49 becoming more skewed towards 60/40 with a temperature of say, 0.3, it would look more like a 50/50 dead even split with a high "smoothing factor" value, while still nullifying the rest of the distribution's low probability outliers.
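The contrast with low Temperature can be checked on a toy distribution. The sketch below is illustrative only: the probabilities are made up, and `quadratic_smooth` uses the assumed form `top - factor * (x - top) ** 2`. It compares how the ratio between the top two tokens and the total mass of the outliers change under each method.

```python
import math


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def apply_temperature(logits, temperature):
    return [x / temperature for x in logits]


def quadratic_smooth(logits, smoothing_factor):
    top = max(logits)
    return [top - smoothing_factor * (x - top) ** 2 for x in logits]


def top_two_ratio(probs):
    return probs[0] / probs[1]


def tail_mass(probs):
    return sum(probs[2:])


# Made-up distribution: two near-tied tokens plus a few weak outliers.
probs = [0.50, 0.48, 0.01, 0.006, 0.004]
logits = [math.log(p) for p in probs]

cold = softmax(apply_temperature(logits, 0.3))
smooth = softmax(quadratic_smooth(logits, 5.0))

for name, p in [("original", probs), ("temperature 0.3", cold), ("smoothing 5.0", smooth)]:
    print(f"{name:16s} top-two ratio {top_two_ratio(p):.4f}  tail mass {tail_mass(p):.3e}")
```

In this sketch, low Temperature widens the gap between the two leading tokens, while a high smoothing factor pulls them closer to an even split; both suppress the outliers.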

Likewise, low values will make the top probabilities less deterministic and are a decent fit for creative writing, as low probability outliers are still being reduced "smoothly" without any specific cutoffs.

How do I scale it?

"0" turns off the sampler entirely. 0.01 would be extremely close to a flat distribution (so extremely unhinged like higher Temperature would be).

You can, in theory, scale to arbitrarily large values from here, and the distribution will gradually become more and more deterministic.

Consider 10.0 as a reasonable "max", but that's just an arbitrary limit.

"Smoothing Factor" values of 0.2-0.3 are generally good for creative writing.
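To get a feel for the scale, here is a small sketch that sweeps the smoothing factor over a made-up set of logits and records the probability of the top token. It again assumes the form `top - factor * (x - top) ** 2`, with 0 treated as "off"; the logits and helper names are hypothetical.

```python
import math


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def quadratic_smooth(logits, smoothing_factor):
    if smoothing_factor == 0.0:
        return list(logits)  # sampler disabled
    top = max(logits)
    return [top - smoothing_factor * (x - top) ** 2 for x in logits]


logits = [3.0, 2.0, 1.0, 0.0, -2.0]
factors = [0.0, 0.01, 0.25, 1.0, 10.0]

# Probability assigned to the top token at each smoothing factor.
top_prob = {f: softmax(quadratic_smooth(logits, f))[0] for f in factors}

for f in factors:
    print(f"smoothing_factor={f:<5} top token probability={top_prob[f]:.4f}")
```

The sweep shows the jump between 0 (sampler off, original distribution) and a tiny positive value (nearly flat), followed by a steady move towards a deterministic choice as the factor grows.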

Preview

Here is a useful webpage that allows you to visualize how the distribution changes in response to this sampler:

https://artefact2.github.io/llm-sampling/index.xhtml

Here is the text-generation-webui PR where I first implemented this: oobabooga/text-generation-webui#5403